Journey to High-Concurrency Reliability

A fast-growing real-time fantasy sports and betting platform faced complex engineering challenges as it scaled to support thousands of concurrent bettors across multiple global markets. From service outages triggered by database bottlenecks to latency spikes during high-traffic sports events, the platform struggled to meet performance, resilience, and delivery demands.

Axelerant partnered with the platform to overhaul its backend systems, streaming infrastructure, aggregation pipelines, and delivery processes. Together, the teams re-engineered the platform to support low-latency APIs, efficient streaming, secure AWS environments, and a component-based delivery culture, unlocking the ability to support 20,000+ concurrent markets with minimal operational overhead.

About the Customer

The customer is a next-generation fantasy gaming and betting platform that serves users across global markets in real time. Built to operate under the intense pressure of live sporting events, the platform delivers market updates, transactional APIs, and analytics with millisecond precision.

The business is built on velocity and scale, where user trust is deeply tied to application reliability and speed during peak concurrency.

- 20,000+ Concurrent Live Markets

- 3,000+ Concurrent Bettors

- 250+ Concurrent Agents

The Challenge

The platform's explosive growth introduced high-stakes engineering and delivery challenges:

API Latency And Infrastructure Load

- Unpredictable spikes during sports events led to latency above acceptable thresholds (P99 > 2s), compromising core user workflows.

- Backend throughput plateaued at ~140 RPS, blocking user scale and stressing transactional endpoints.

- Database-level deadlocks triggered cascading service outages due to unbounded query payloads.

Streaming System Bottlenecks

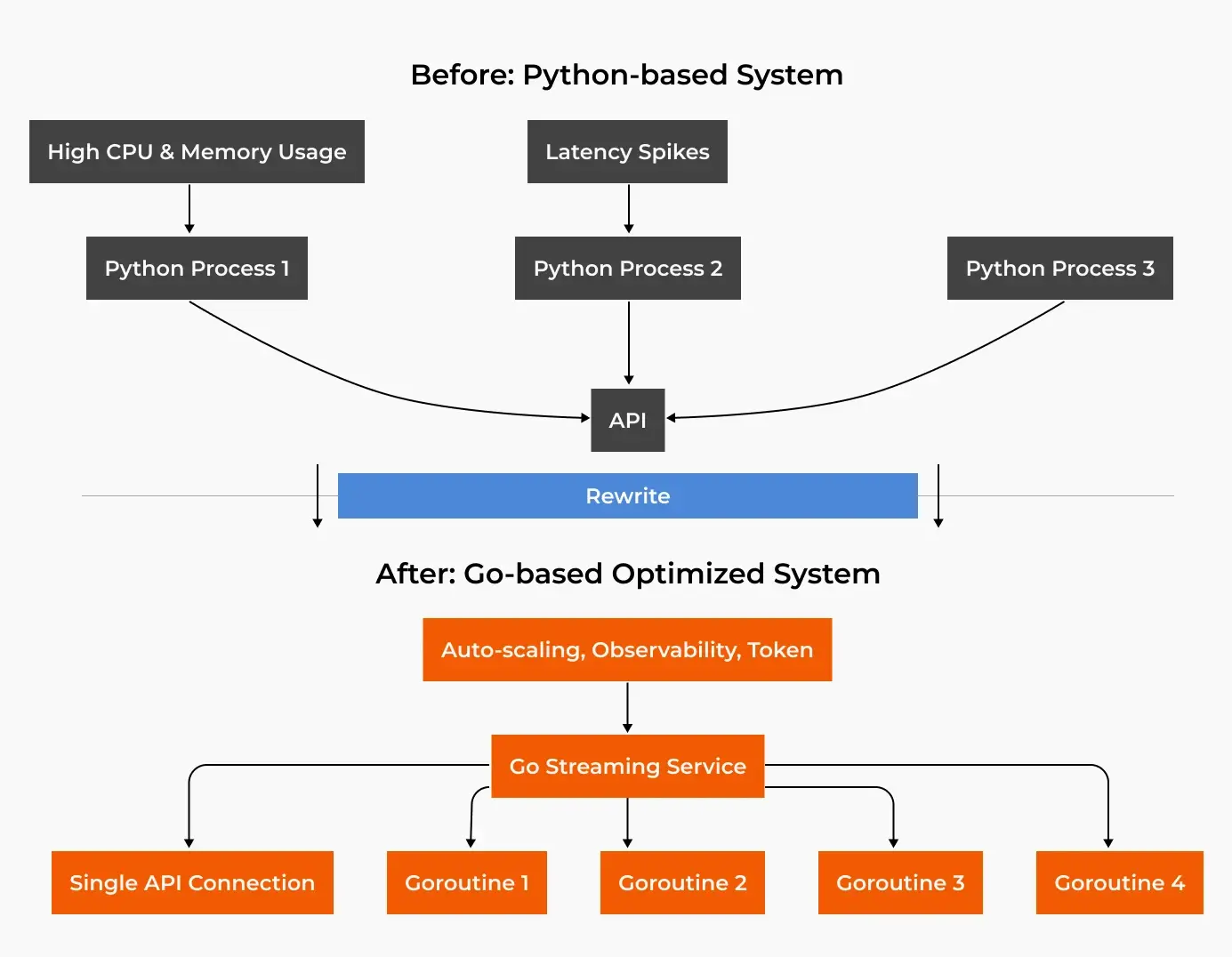

- Python-based independent streaming processes created memory bloat, CPU exhaustion, and deployment friction.

- Markets often failed to update in time, especially when the system had to support 20K+ live market feeds.

Platform Fragility And Incident Recovery Gaps

- Service-wide outages were triggered by silent proxy bypasses, logging overloads, and a lack of monitoring guardrails.

- Operational runbooks and observability pipelines were insufficient for root cause analysis.

Multi-Level Data Aggregation Failure

- Dashboard APIs triggered recursive joins across user hierarchies, causing long query times and data inconsistencies.

- Real-time visibility into betting PnL was inconsistent and unstable.

Delivery Model Constraints

- Feature delivery was unpredictable post-MVP due to siloed responsibilities and backend/frontend misalignment.

- Rework and missed expectations increased due to late integration and fragmented QA cycles.

Experience Bottlenecks On Login

- The platform’s first-touch user flow suffered from large bundle sizes, long FCP/LCP, and blocking tasks.

- Lighthouse scores dropped below 70, impacting SEO and user retention.

The Solution

Axelerant deployed a comprehensive engineering-first transformation, re-architecting core services, infrastructure, and team workflows.

Cloud-Native Platform With Kubernetes And Golang

- Built a fully containerized platform on Kubernetes with namespaced isolation, pod-level scaling, and GitOps workflows via ArgoCD and Helm.

- Rewrote Python services in Golang, cutting CPU usage from 6–8 cores to <1 core and memory from 16 GB to <500 MB per pod.

- Added Redis ElastiCache, PostgreSQL with failover, and Kafka with persistent topics for real-time message streams.

- Enhanced autoscaling logic via Karpenter and PerfectScale; enabled observability using OpenTelemetry.

Streaming Rewrite For 15x Efficiency

- Replaced fragmented Python processes with a unified Go service using goroutines and persistent WebSocket connections.

- Reduced memory usage from 6 GB → 150 MB and CPU from 2.5 cores → 0.1 core.

- Added configurable goroutine pools, message queue backpressure controls, and fault isolation mechanisms.

- Introduced streaming observability with Prometheus, Loki, and custom Grafana dashboards.
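
The goroutine pool and backpressure controls described above can be sketched roughly as follows. This is a minimal illustration, not the platform's actual code: the `Update` type, the `Pool` API, and the pool and queue sizes are all assumptions made for the example. A fixed number of workers drain a bounded queue, and `Submit` reports `ErrQueueFull` instead of blocking, so a slow consumer cannot grow memory without limit.

```go
package main

import (
	"errors"
	"fmt"
	"sync"
)

// Update is a hypothetical market update; the field names are illustrative.
type Update struct {
	MarketID string
	Odds     float64
}

// ErrQueueFull signals backpressure. The caller decides whether to drop,
// retry, or slow the upstream feed.
var ErrQueueFull = errors.New("update queue full")

// Pool fans updates out to a fixed number of worker goroutines.
type Pool struct {
	queue chan Update
	wg    sync.WaitGroup
}

// NewPool starts `workers` goroutines that read from a queue holding at
// most `depth` pending updates. Both values are meant to be configurable.
func NewPool(workers, depth int, handle func(Update)) *Pool {
	p := &Pool{queue: make(chan Update, depth)}
	for i := 0; i < workers; i++ {
		p.wg.Add(1)
		go func() {
			defer p.wg.Done()
			for u := range p.queue {
				handle(u)
			}
		}()
	}
	return p
}

// Submit enqueues without blocking and returns ErrQueueFull when the
// buffer has no free slot.
func (p *Pool) Submit(u Update) error {
	select {
	case p.queue <- u:
		return nil
	default:
		return ErrQueueFull
	}
}

// Close stops accepting updates and waits for queued work to finish.
func (p *Pool) Close() {
	close(p.queue)
	p.wg.Wait()
}

func main() {
	// A buffered channel doubles as a goroutine-safe counter here.
	processed := make(chan struct{}, 1000)
	p := NewPool(4, 64, func(Update) { processed <- struct{}{} })

	rejected := 0
	for i := 0; i < 1000; i++ {
		u := Update{MarketID: fmt.Sprintf("market-%d", i), Odds: 1.9}
		if err := p.Submit(u); errors.Is(err, ErrQueueFull) {
			rejected++
		}
	}
	p.Close()
	fmt.Printf("processed=%d rejected=%d\n", len(processed), rejected)
}
```

The split between processed and rejected updates depends on scheduling, but every update is accounted for as one or the other. Rejecting on a full queue is only one possible policy; blocking with a timeout or dropping stale updates for the same market are alternatives.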

Database Deadlock Mitigation And Recovery Engineering

- Applied query payload governance, setting upper bounds on joins, subqueries, and sort parameters.

- Added retry-safe transactional workflows and async processors for slow writes.

- Designed incident recovery playbooks with pinned session flushing, log pruning, and failover management.
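
As a rough illustration of query payload governance, the sketch below rejects a request before it reaches the database when it exceeds configured bounds. The `QueryRequest` shape, the `Limits` fields, and the numeric limits are hypothetical; the source states only that upper bounds were set on joins, subqueries, and sort parameters.

```go
package main

import (
	"errors"
	"fmt"
)

// QueryRequest is a hypothetical shape for a client-supplied query payload.
type QueryRequest struct {
	Joins      []string
	SortFields []string
	PageSize   int
}

// Limits holds the upper bounds enforced before a query is built.
// The fields and their values are assumptions for this example.
type Limits struct {
	MaxJoins      int
	MaxSortFields int
	MaxPageSize   int
}

// ErrPayloadTooLarge lets callers map any violation to a single response
// (for example HTTP 400) with errors.Is.
var ErrPayloadTooLarge = errors.New("query payload exceeds governance limits")

// Validate returns nil when the request is within bounds, or an error
// wrapping ErrPayloadTooLarge that names the first violated limit.
func (l Limits) Validate(q QueryRequest) error {
	switch {
	case len(q.Joins) > l.MaxJoins:
		return fmt.Errorf("%w: %d joins (max %d)", ErrPayloadTooLarge, len(q.Joins), l.MaxJoins)
	case len(q.SortFields) > l.MaxSortFields:
		return fmt.Errorf("%w: %d sort fields (max %d)", ErrPayloadTooLarge, len(q.SortFields), l.MaxSortFields)
	case q.PageSize < 1 || q.PageSize > l.MaxPageSize:
		return fmt.Errorf("%w: page size %d (allowed 1..%d)", ErrPayloadTooLarge, q.PageSize, l.MaxPageSize)
	}
	return nil
}

func main() {
	limits := Limits{MaxJoins: 3, MaxSortFields: 2, MaxPageSize: 500}

	ok := QueryRequest{Joins: []string{"users", "bets"}, SortFields: []string{"placed_at"}, PageSize: 100}
	bad := QueryRequest{Joins: []string{"a", "b", "c", "d"}, PageSize: 100}

	fmt.Println("ok request: ", limits.Validate(ok))
	fmt.Println("bad request:", limits.Validate(bad))
}
```

Running the check at the API boundary means an oversized request fails fast with a clear error rather than holding locks inside a long-running query.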

Multi-Account AWS Control Tower Setup

- Transitioned to an AWS Control Tower model, segmenting dev, staging, and production environments.

- Integrated Google Workspace with AWS SSO to manage role-based access across developer, product, and quality teams.

- Applied SCPs, CloudTrail, and VPC Flow Logs to enforce security compliance.

- Set up Tailscale VPN routing and API firewalling for restricted services.

Feature-First Engineering Model

- Formed stable team pods around modular components like user management, configuration, and payouts.

- Shifted to API-first design: frontend and QA teams worked with mocked contracts before backend merges.

- Eliminated partial testing: a component entered QA only when fully integrated.

- Each sprint included demo-ready components with working frontend-backend logic.

Load Testing And Continuous Performance Loops

- Built load testing pipelines using k6 to simulate more than 2,000 concurrent bettors across a large set of market types.

- Detected bottlenecks in transactional APIs with 19s response times; reduced to <250ms via query profiling and caching.

- Instrumented latency and error budgets across critical endpoints using P95/P99 metrics and OpenTelemetry.

- Enabled real-time autoscaling tied to event rate and request patterns.
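
To make the P95/P99 latency budgets concrete, here is a small sketch of how a percentile can be computed from raw latency samples and compared against a budget. It uses the nearest-rank method, which is an assumption for this example; k6 and OpenTelemetry backends compute percentiles with their own methods. The function names and sample values are illustrative.

```go
package main

import (
	"fmt"
	"math"
	"sort"
)

// Percentile returns the nearest-rank p-th percentile (0 < p <= 100) of the
// given latency samples in milliseconds. The input slice is not modified.
// It returns 0 for an empty input.
func Percentile(samplesMs []float64, p float64) float64 {
	if len(samplesMs) == 0 {
		return 0
	}
	sorted := append([]float64(nil), samplesMs...)
	sort.Float64s(sorted)

	// Nearest rank: the smallest value with at least p% of samples at or below it.
	rank := int(math.Ceil(p * float64(len(sorted)) / 100))
	if rank < 1 {
		rank = 1
	}
	if rank > len(sorted) {
		rank = len(sorted)
	}
	return sorted[rank-1]
}

// WithinBudget reports whether the p-th percentile stays at or below budgetMs.
func WithinBudget(samplesMs []float64, p, budgetMs float64) bool {
	return Percentile(samplesMs, p) <= budgetMs
}

func main() {
	// Illustrative samples only; one slow outlier dominates the tail.
	samples := []float64{120, 95, 310, 180, 2300, 140, 160, 210, 130, 150}

	fmt.Printf("P95=%.0fms P99=%.0fms within 250ms budget at P99: %v\n",
		Percentile(samples, 95), Percentile(samples, 99), WithinBudget(samples, 99, 250))
}
```

Tail percentiles matter here because a single slow request in a small window can breach the budget even when the median looks healthy.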

PnL Aggregation With Kafka And Golang

- Replaced synchronous, recursive dashboard queries with an event-driven architecture using Kafka and Golang.

- Implemented durable, Redis-backed event processing with pre-aggregation on key actions (bets placed, odds updated, user hierarchy changes) using hierarchical sync queues and DLQ processors.

- Dashboard load times dropped from 6–8s to sub-1s, even under high traffic.
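
The core idea of pre-aggregation can be sketched as follows: each event updates running totals for the user and every ancestor in the hierarchy at write time, so a dashboard read becomes a single lookup. This is a simplified in-memory model under stated assumptions. The maps stand in for the Redis-backed store, a plain loop stands in for the Kafka consumer, and the `PnLEvent` and `Aggregator` names are hypothetical. Re-parenting users and dead-letter handling are omitted.

```go
package main

import "fmt"

// PnLEvent is a hypothetical event emitted when a user's PnL changes.
// Delta is in minor currency units to avoid floating-point rounding.
type PnLEvent struct {
	UserID string
	Delta  int64
}

// Aggregator keeps a running total per user and per ancestor.
// In this sketch the maps stand in for a durable store.
type Aggregator struct {
	parent map[string]string // child ID -> parent ID; roots have no entry
	totals map[string]int64
}

// NewAggregator takes the current user hierarchy as a child-to-parent map.
func NewAggregator(parent map[string]string) *Aggregator {
	return &Aggregator{parent: parent, totals: make(map[string]int64)}
}

// Apply adds the event's delta to the user and to each ancestor.
// The visited set guards against a malformed hierarchy that contains a cycle.
func (a *Aggregator) Apply(e PnLEvent) {
	visited := make(map[string]bool)
	for id := e.UserID; id != "" && !visited[id]; id = a.parent[id] {
		visited[id] = true
		a.totals[id] += e.Delta
	}
}

// Total is the dashboard read path: one lookup, no joins.
func (a *Aggregator) Total(id string) int64 {
	return a.totals[id]
}

func main() {
	agg := NewAggregator(map[string]string{
		"bettor-1": "agent-a",
		"bettor-2": "agent-a",
		"agent-a":  "admin",
	})

	// A slice stands in for the event stream.
	events := []PnLEvent{
		{UserID: "bettor-1", Delta: -500},
		{UserID: "bettor-2", Delta: 1200},
	}
	for _, e := range events {
		agg.Apply(e)
	}

	fmt.Printf("agent-a=%d admin=%d\n", agg.Total("agent-a"), agg.Total("admin"))
	// prints "agent-a=700 admin=700"
}
```

The write path does work proportional to the depth of the hierarchy for each event, which is the trade that replaces recursive joins at read time.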

Login Performance Overhaul

- Lighthouse scores increased to 99, FCP dropped from 14.5s to 4.97s, and long tasks were eliminated.

- Refactored UI structure for accessibility, speed, and better LCP distribution.

The Result

The engagement produced measurable gains across performance, stability, and delivery:

89.13% API Latency Reduction

- Before: P99 latencies for key APIs exceeded 2.3s, with backend throughput capped around 140 RPS.

- After: Optimized service orchestration, query design, and caching pushed P99 below 250ms, and throughput increased to 560+ RPS, confirmed under controlled load.

40x Streaming Memory Efficiency

- Rewriting from Python to Go reduced the memory per process from 6 GB to 150 MB.

- Consolidation into a single streaming connection removed fragmentation, enabling scale to 20K concurrent feeds.

57% Faster Login Loads

- Login bundle shrank from 258kB to 115kB after replacing AntD with Panda CSS and Formik.

- Lighthouse score improved from 86 to 99.

- FCP/LCP dropped from 14.5s to 4.97s, eliminating all blocking tasks and improving SEO as well as UX.

80% Drop In Incident Frequency During Peak Events

- Payload governance, structured logging, and runbooks with automated flushing reduced production incident rates by over 80% during high-traffic sporting events.

- Prior to this, oversized payloads and logging surges frequently caused cascading failures across services.

Sub-250ms Aggregated PnL Dashboard Loads

- Admin dashboards that previously took 6–8s to compute nested user earnings now load in <250ms via pre-computed cache layers.

- Kafka-driven pre-aggregation reduced DB load and allowed real-time updates across dynamically changing user hierarchies.

Delivery Velocity And Predictability Improved

- Every sprint now ends with frontend-ready, QA-verified component demos, increasing delivery confidence and enabling better stakeholder alignment.

- Integration rework and QA triage efforts dropped significantly as API-first planning and cross-functional pods took full ownership.

Project Highlights

- Sub-Second API Latencies at Scale

- Streaming Rewrite For Platform Stability

- AWS Governance With Control Tower

- Event-Driven Aggregation For Real-Time Dashboards

Sub-Second API Latencies at Scale

Axelerant introduced structured performance profiling, rewrote core backend services in Go, and optimized database access with targeted indexing and payload decoupling:

- Backend throughput scaled from 140 RPS to 560+ RPS, validated under live load simulations.

- Key transactional endpoints (e.g., market transactions, odds fetch) were reduced from 2.3s P99 latency to <250ms.

- Multiple layers of latency mitigation were applied, including caching of hierarchical lookups and compression of API payloads.

Streaming Rewrite For Platform Stability

The streaming system was completely rebuilt using Go, enabling:

- A single persistent WebSocket connection with goroutine-based handlers for each market stream.

- Memory reduction from 6 GB to 150 MB, and CPU usage from 2.5 cores to 0.1 core per pod.

- Smooth support for 20,000+ concurrent live markets with no dropped connections or missed updates, even under active load.

- Built-in metrics exposed internal state (queue lag, message time skew) for proactive recovery.

AWS Governance With Control Tower

The platform's infrastructure was migrated into a multi-account, highly governed AWS setup:

- Development, production, logging, and shared services were fully isolated.

- Centralized logging, role-based access, and policy guardrails reduced surface area and improved operational clarity.

- Account-specific billing and tagging improved cost attribution per environment and per service.

- Guardrails such as AWS Config and CloudTrail were activated organization-wide for compliance enforcement.

Event-Driven Aggregation For Real-Time Dashboards

The legacy synchronous aggregation architecture was replaced with a Kafka-based event pipeline:

- Users, bonuses, and referral earnings are now pre-aggregated in real time and stored in durable Redis snapshots.

- Dashboard queries became lightweight reads instead of heavy nested joins.

- A live hierarchy sync mechanism was built to automatically propagate structural changes (e.g., user level changes, new agents).

- Admin dashboards now load in <1 second, even with over 250 concurrent agents and thousands of downstream users querying simultaneously.
