Introduction

A distributed system is a collection of computer programs that work together to achieve a common goal. For example, Spotify uses a distributed network of servers to store and deliver music and podcasts to its users.

But if one of these computer programs stops working, it leads to outages. According to the Uptime Institute Global Data Center Survey, 80% of data center managers and operators have experienced some type of outage in the past three years. Over 60% of these failures result in total losses of at least $100,000.

To reduce these outages, distributed systems need to be more resilient.

8 Strategies For Building Resilient Distributed Systems

Resilience strategies provide a foundation for building robust, scalable, and reliable distributed applications and services.

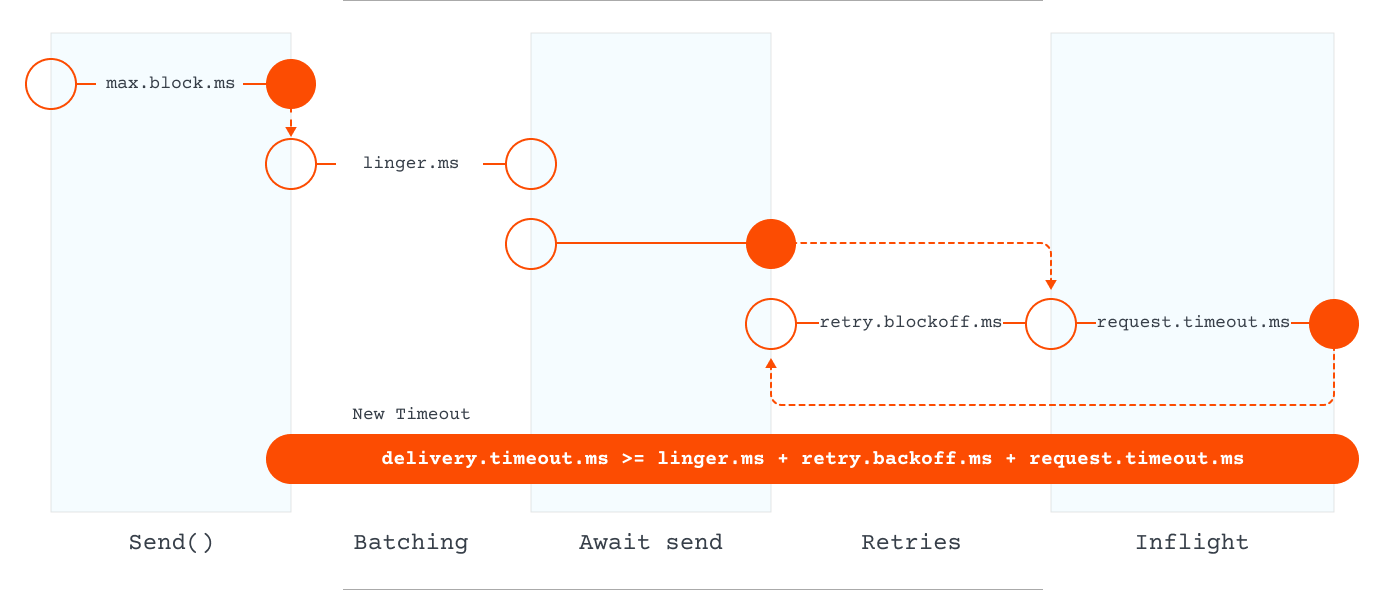

1. Timeouts And Automatic Retries

Timeouts set a maximum time limit for completing a request. If the request takes longer than the specified time, it is considered a failure. Timeouts prevent a system from waiting indefinitely for a response, help free up resources, and prevent bottlenecks.

Assuming that the failure is due to a temporary issue, automatic retries resend a failed request after a predefined time. This strategy helps handle transient failures, such as network glitches or temporary unavailability of a service, by allowing the system to recover and complete the operation.
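As a minimal sketch of the idea, the snippet below retries an operation after a fixed delay when it fails with a transient error or a timeout. The names `TransientError`, `call_with_retries`, and `flaky` are hypothetical and chosen only for illustration. The sketch assumes the operation itself raises `TimeoutError` when it exceeds its time limit.

```python
import time


class TransientError(Exception):
    """Hypothetical error type for failures that are worth retrying."""


def call_with_retries(operation, max_attempts=3, delay_seconds=0.1, sleep=time.sleep):
    """Call operation(); on a transient failure, wait a fixed delay and try again."""
    last_error = None
    for attempt in range(1, max_attempts + 1):
        try:
            return operation()
        except (TransientError, TimeoutError) as error:
            last_error = error
            if attempt < max_attempts:
                sleep(delay_seconds)  # predefined wait before the next attempt
    raise last_error  # every attempt failed, so give up


# Usage: an operation that fails twice and then succeeds.
attempts = {"count": 0}


def flaky():
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise TransientError("temporary glitch")
    return "ok"


result = call_with_retries(flaky, max_attempts=3, delay_seconds=0)
```

Only errors that are plausibly temporary are retried here. Any other exception propagates immediately, because resending a request that fails for a permanent reason only adds load.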

If implemented incorrectly, retries can amplify small errors into system-wide outages.

When setting up retries, developers must define a maximum number of retry attempts before giving up. However, configuring retries this way poses two significant challenges.

Challenges In Configuring Retries

Guessing The Maximum Number Of Retries

Choosing an effective number of retries is crucial. Allowing more than one retry attempt is generally wise, and allowing several is prudent.

However, permitting too many retry attempts can result in a surge of additional requests, imposing an extra load on the system. Excessive retries can also significantly elevate the latency of requests.

Developers can make an educated guess, choose a maximum retry attempt number, and fine-tune it through trial and error until the system exhibits the desired behavior.

Vulnerabilities With Retry Storms

A retry storm occurs when a particular service encounters a higher-than-normal failure rate, prompting its clients to reattempt the failed requests. The additional load generated by these retries increases the failure rate, further slowing down the service and leading to even more retries.

If each client is set to retry up to three times, this can result in a fourfold increase in the number of requests sent. To compound the issue further, if any of the clients' clients are configured with retries, the number of retries multiplies, potentially transforming a small number of errors into a self-induced denial-of-service attack.
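The arithmetic behind this amplification can be checked with a short calculation. The function name `worst_case_requests` is hypothetical. It assumes every layer of callers uses the same retry limit and that every attempt fails, which is the worst case.

```python
def worst_case_requests(retries_per_layer, layers):
    """Worst-case requests reaching the failing service for one original request.

    Each layer makes 1 original attempt plus `retries_per_layer` retries,
    and every attempt at one layer triggers a full set of attempts below it.
    """
    return (1 + retries_per_layer) ** layers


one_layer = worst_case_requests(retries_per_layer=3, layers=1)   # 4
two_layers = worst_case_requests(retries_per_layer=3, layers=2)  # 16
```

With a single layer of clients retrying up to three times, one request becomes four. If those clients' callers also retry up to three times, the same request can become sixteen.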

Solution: Optimize Using Retry Budgets

To avoid the challenges posed by retry storms and the arbitrary assignment of retry attempt numbers, retries can be configured through retry budgets. Instead of setting a fixed maximum for the number of retry attempts per request, a service mesh monitors the ratio between regular requests and retries, ensuring it stays below a configurable limit.

For instance, users might indicate a preference for retries to introduce no more than a 20% increase in requests. The service mesh will then conduct retries while adhering to this specified ratio.

Configuring retries always involves a trade-off between improving success rates and avoiding excessive additional load on the system. Retry budgets make this trade-off explicit by enabling users to specify the amount of additional load the system is willing to tolerate from retries.
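The sketch below shows one simplified way a retry budget could be tracked. It is not how any particular service mesh implements it. The class name `RetryBudget` and its methods are hypothetical, and a real implementation would typically count requests over a sliding time window rather than forever.

```python
class RetryBudget:
    """Permit retries only while they stay within a percentage of regular requests."""

    def __init__(self, retry_percent=20):
        self.retry_percent = retry_percent
        self.requests = 0
        self.retries = 0

    def record_request(self):
        """Count one regular (non-retry) request."""
        self.requests += 1

    def try_acquire_retry(self):
        """Return True and count the retry if it keeps retries within budget."""
        # Integer arithmetic avoids floating-point rounding at the boundary.
        if (self.retries + 1) * 100 <= self.retry_percent * self.requests:
            self.retries += 1
            return True
        return False


# Usage: 10 regular requests with a 20% budget leave room for 2 retries.
budget = RetryBudget(retry_percent=20)
for _ in range(10):
    budget.record_request()
granted = [budget.try_acquire_retry() for _ in range(4)]
```

Here the first two retries are granted and the rest are refused, regardless of which individual request is asking. This caps the extra load even when many requests fail at once.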

2. Deadlines

A deadline is a specified moment beyond which a client is unwilling to wait for a response from a server. Efficient resource utilization and latency improvements are achieved when clients avoid unnecessary waiting and servers know when to cease processing requests.

When a client sets a deadline on an API request, it establishes the point in time by which the response should be received, whereas a timeout indicates the maximum acceptable duration for the request. Here’s how deadlines work on the client side and the server side.

Deadlines On The Client Side

By default, Remote Procedure Call (RPC) frameworks such as gRPC leave the deadline unset, allowing a client to potentially wait indefinitely for a response. To prevent this, it is essential to explicitly set a realistic deadline for clients.

Setting an appropriate deadline involves making an educated guess based on system knowledge validated through load testing. If a server exceeds the set deadline while processing a request, the client will terminate the RPC, signaling a DEADLINE_EXCEEDED status.

Deadlines On The Server Side

Servers may receive requests with unrealistically short deadlines, risking insufficient time for a proper response. In such cases, a gRPC server automatically cancels a call (resulting in a CANCELLED status) once the client's specified deadline has passed.

The server application must halt any spawned activity servicing the request. Long-running processes should periodically check if the initiating request has been canceled, prompting a halt in processing.
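The periodic check can be illustrated without any RPC framework. In this sketch, `DeadlineExceeded` and `process_items` are hypothetical names, and the injectable `clock` parameter exists only so the example can run deterministically. A real gRPC handler would instead query the call context provided by the framework.

```python
import time


class DeadlineExceeded(Exception):
    """Hypothetical error, similar in spirit to a DEADLINE_EXCEEDED status."""


def process_items(items, deadline, clock=time.monotonic):
    """Process items one at a time, stopping as soon as the deadline has passed."""
    results = []
    for item in items:
        # Check before each unit of work so no effort is spent on an
        # answer the client has already stopped waiting for.
        if clock() >= deadline:
            raise DeadlineExceeded(f"stopped after {len(results)} of {len(items)} items")
        results.append(item * 2)  # stand-in for real work
    return results


# Usage with a fake clock that advances by one unit per check.
ticks = iter(range(100))
try:
    process_items([1, 2, 3, 4, 5], deadline=3, clock=lambda: next(ticks))
    outcome = "completed"
except DeadlineExceeded as error:
    outcome = str(error)
```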

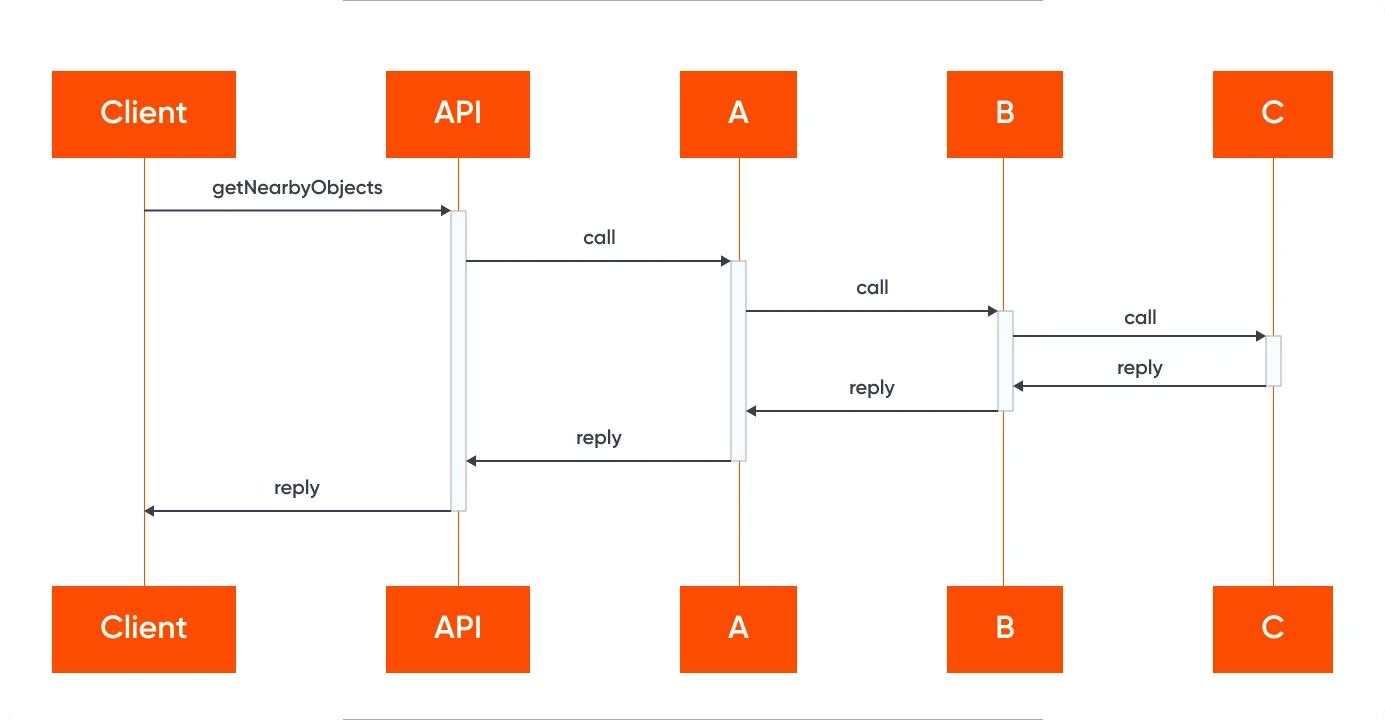

Deadline Propagation

In scenarios where a server needs to call another server to generate a response, propagating the deadline from the original client becomes important. Some gRPC implementations support the automatic propagation of deadlines from incoming to outgoing requests.

While this is the default behavior in certain languages (like Java and Go), it may need explicit enabling in others (like C++). Propagating the deadline involves converting it into a timeout, accounting for the already elapsed time. This mitigates potential clock skew issues between servers, ensuring a reliable system operation.
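The conversion from deadline to remaining timeout can be sketched as follows. The function names and the `clock` parameter are hypothetical, and times are kept in whole milliseconds to keep the arithmetic exact. Because only a duration is passed downstream, the two servers do not need synchronized clocks.

```python
import time


def now_ms():
    """Monotonic clock reading in whole milliseconds."""
    return int(time.monotonic() * 1000)


def handle_request(timeout_ms, do_local_work, call_downstream, clock=now_ms):
    """Do local work, then call downstream with whatever time budget is left."""
    started = clock()
    do_local_work()
    elapsed = clock() - started
    remaining = timeout_ms - elapsed
    if remaining <= 0:
        # The caller has already given up, so the downstream call is pointless.
        raise TimeoutError("deadline exceeded before the downstream call")
    return call_downstream(timeout_ms=remaining)


# Usage with a fake clock: local work consumes 300 ms of a 1000 ms budget.
readings = iter([5000, 5300])
downstream_timeout = handle_request(
    timeout_ms=1000,
    do_local_work=lambda: None,
    call_downstream=lambda timeout_ms: timeout_ms,  # echoes the timeout it was given
    clock=lambda: next(readings),
)
```

In this run the downstream call receives a 700 ms timeout, not the original 1000 ms.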

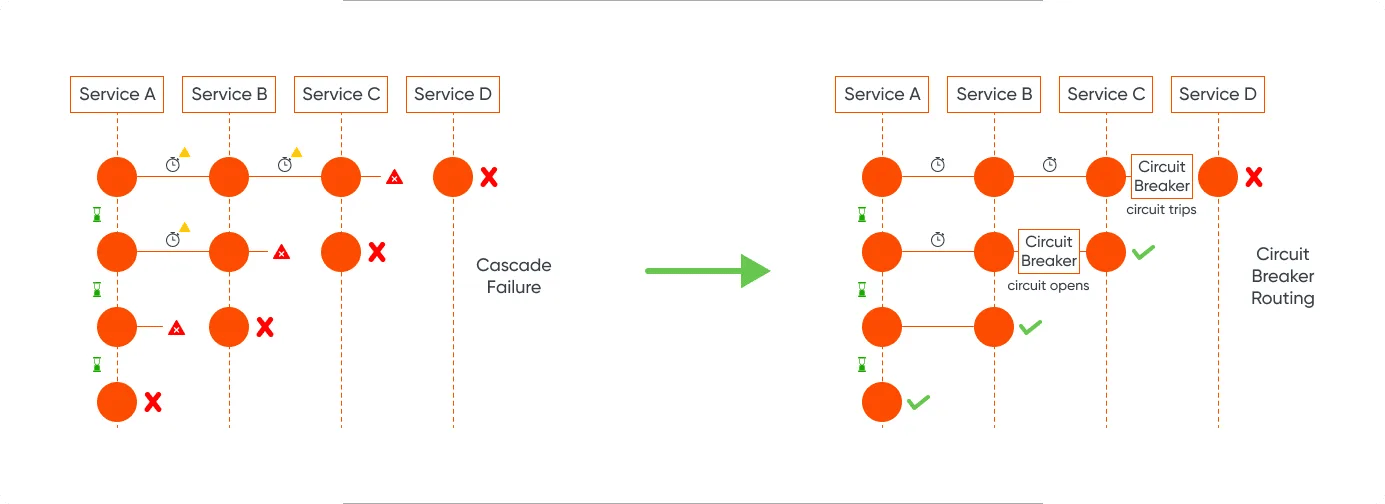

3. Circuit Breakers

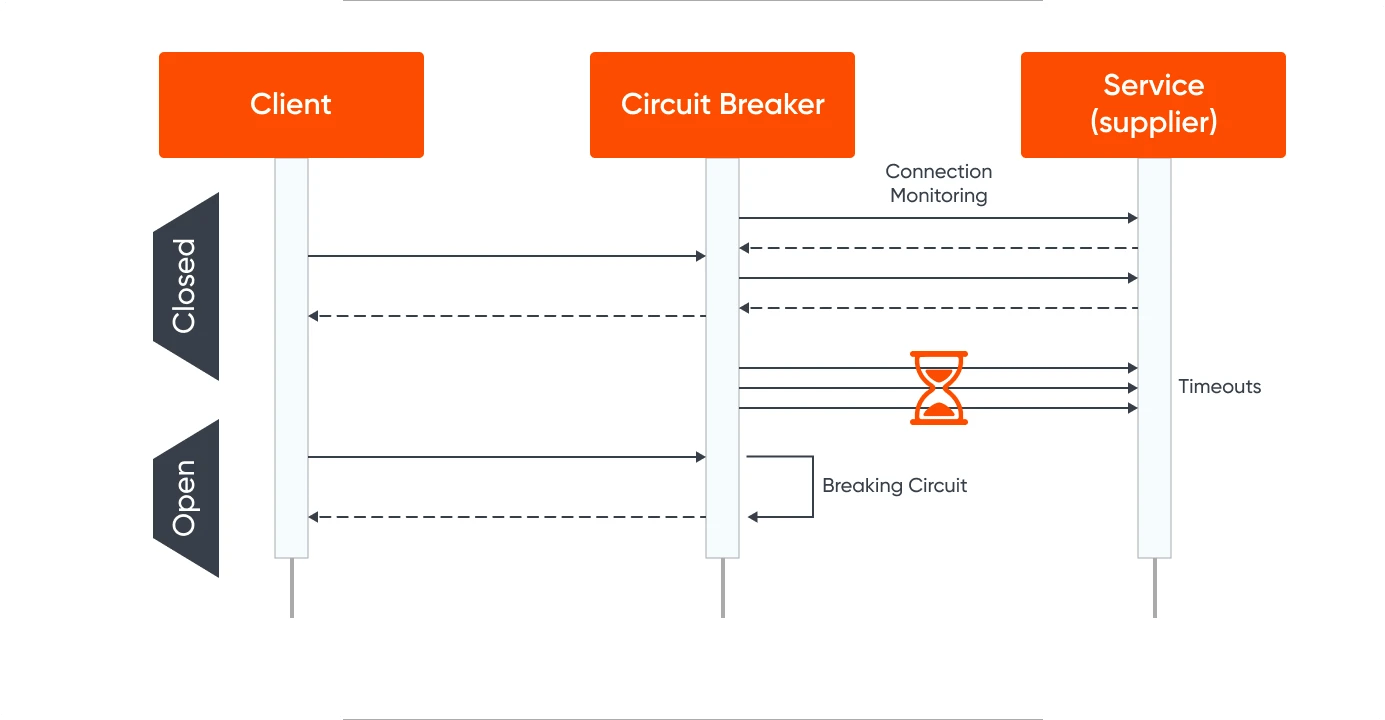

Circuit breakers are a mechanism for preventing a system from making requests that are likely to fail. They avoid unnecessary load on a failing service and allow it time to recover.

When a certain number of consecutive failures or timeouts occur, the circuit breaker ‘trips’ and stops sending requests. After a cooldown period, the circuit breaker may enter a ‘half-open’ state, allowing a limited number of requests to check if the service has recovered before fully resuming normal operation.

Avoid treating circuit breakers as a substitute for the other strategies for building resilient distributed systems. Circuit breakers should also be avoided when dealing with access to local private resources within an application, such as an in-memory data structure. Employing a circuit breaker in this environment would introduce unnecessary overhead to the system.

How To Implement A Circuit Breaker/Proxy

A circuit breaker serves as a proxy for operations prone to failure, monitoring recent failure occurrences to determine whether to permit the operation to proceed or immediately return an exception.

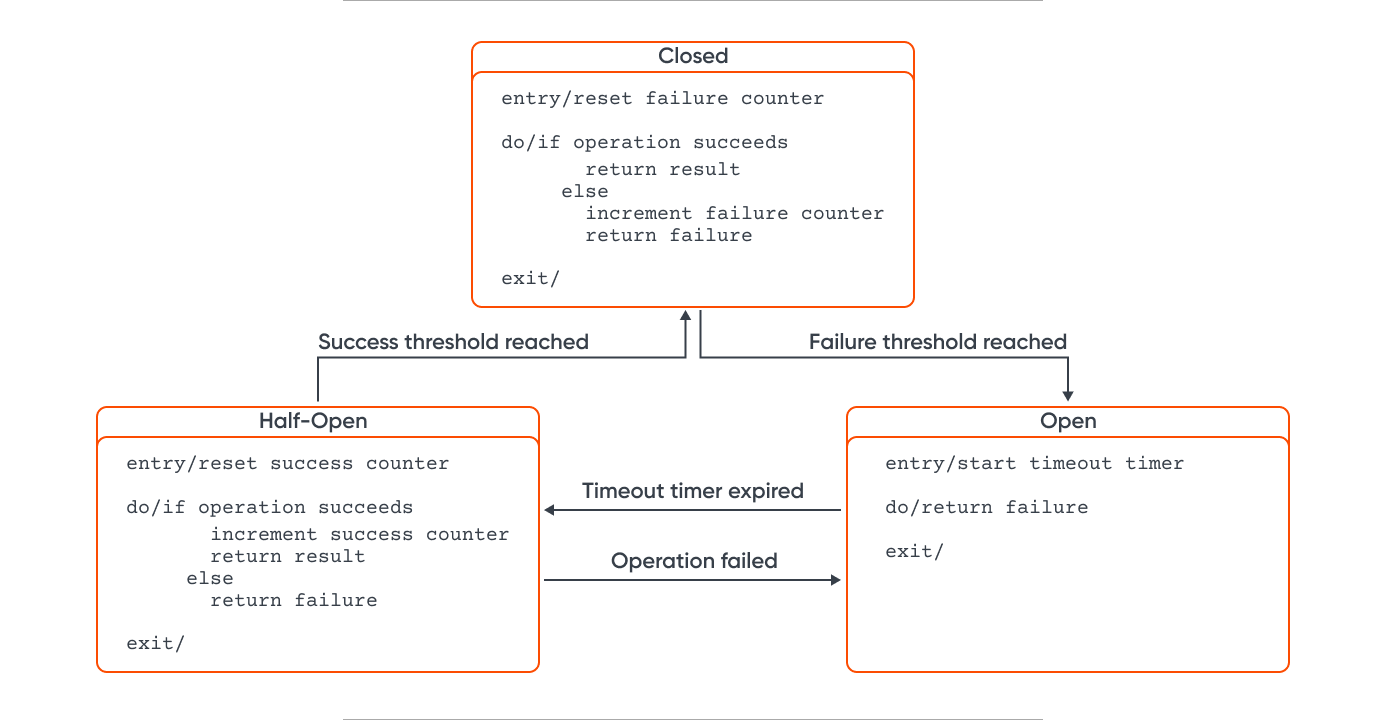

The proxy can be implemented as a state machine with three states mirroring electrical circuit breaker functionality.

Closed

Application requests are directed to the operation. The proxy keeps a count of recent failures and increments it whenever a call is unsuccessful. The proxy enters the Open state if failures surpass a specified threshold within a given period.

When the proxy enters the Open state, a timeout timer starts, and upon expiry, the proxy transitions to the Half-Open state. The timer gives the system time to rectify the underlying issue before the application retries.

Open

Application requests fail instantly, and an exception is returned.

Half-Open

A limited number of requests pass through to invoke the operation. If successful, the circuit breaker assumes the fault causing the previous failures has been rectified and switches to the Closed state, resetting the failure counter. Any failure reverts the circuit breaker to the Open state, restarting the timeout to provide additional recovery time.

External mechanisms handle system recovery, such as restoring or restarting a failed component or repairing a network connection.

The Circuit Breaker pattern ensures system stability during recovery, minimizing performance impact. It rejects potentially failing operations, maintaining system response time. Event notifications during state changes aid in monitoring system health, and customization allows adaptation to different failure types.

For instance, the timeout duration could be adjusted based on the severity of the failure, or the circuit breaker could return a meaningful default value in the Open state instead of raising an exception.
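The three-state proxy described in this section can be sketched as a small class. This is a simplified illustration under stated assumptions, not a production implementation. It counts consecutive failures instead of failures within a time window, lets a single trial call through in the Half-Open state, and is not thread-safe. The names `CircuitBreaker` and `CircuitOpenError` are hypothetical.

```python
import time


class CircuitOpenError(Exception):
    """Raised when the breaker rejects a call without invoking the operation."""


class CircuitBreaker:
    def __init__(self, failure_threshold=3, recovery_timeout=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.recovery_timeout = recovery_timeout
        self.clock = clock
        self.state = "closed"
        self.failures = 0
        self.opened_at = None

    def call(self, operation):
        if self.state == "open":
            if self.clock() - self.opened_at < self.recovery_timeout:
                raise CircuitOpenError("circuit is open")  # fail instantly
            self.state = "half-open"  # timeout expired, allow a trial call
        try:
            result = operation()
        except Exception:
            self._on_failure()
            raise
        self._on_success()
        return result

    def _on_failure(self):
        self.failures += 1
        if self.state == "half-open" or self.failures >= self.failure_threshold:
            self.state = "open"
            self.opened_at = self.clock()  # restart the recovery timeout

    def _on_success(self):
        self.state = "closed"
        self.failures = 0


# Usage with a controllable clock.
now = [0.0]
breaker = CircuitBreaker(failure_threshold=2, recovery_timeout=10.0, clock=lambda: now[0])


def failing():
    raise RuntimeError("service down")


for _ in range(2):
    try:
        breaker.call(failing)
    except RuntimeError:
        pass
state_after_failures = breaker.state  # breaker has tripped

now[0] = 5.0  # still inside the recovery timeout
try:
    breaker.call(lambda: "ok")
    rejected = False
except CircuitOpenError:
    rejected = True

now[0] = 11.0  # timeout expired, the trial call succeeds
breaker.call(lambda: "ok")
state_after_recovery = breaker.state
```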

4. Redundancy And Replication

In distributed computing, a diverse array of computing resources exists, such as containers, nodes, and clusters, giving rise to numerous elements that can be replicated. Redundancy may encompass various forms of external resources.

For instance, redundancy manifests as repeated calls to the same function from different locations in the system or the duplication of references pointing to identical data. Redundancy is also evident in duplicated execution time within a function, surplus memory, files, or processes.

Within a distributed system, nodes can refer to an extra memory segment, file (such as a database), or process. A redundant node is any node that isn't indispensable for the correct functioning of the distributed system. Simply put, any node contributing beyond the bare minimum functionality of the system is considered extraneous and redundant.

In a distributed system, there are numerous replicated functions and components. While adding nodes to a system helps the system perform better, not every added node is essential for the system.

Why Are Redundant Nodes Introduced?

Transitioning from the concept of redundancy to replication helps answer this question.

While redundancy facilitates the duplication of components within our system, it isn't inherently beneficial. Redundancy fails to be advantageous if a redundant node falls out of sync with the original node from which it was copied.

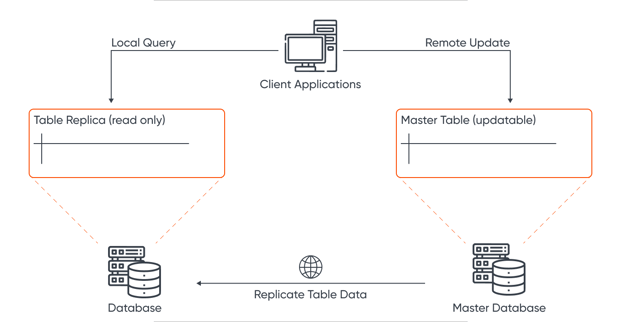

Replication can be viewed as a subset of redundancy. Both involve creating redundant nodes within a system. But replication takes the idea of a redundant node a step further by ensuring that the redundant node (or replica) is identical to all other copies.

At first glance, this might seem straightforward; users might assume that a node copied from somewhere else will be identical to its original.

Not necessarily.

Consider a scenario where a node is copied, creating a redundant one. If later something about the original node is modified—perhaps its state, a value it contains, or internal behavior—what happens to the redundant node?

Nothing.

If a simple redundant node is created, there is nothing in place within the system to ensure that the redundant node will:

be aware of the change and

update itself or be updated by someone else to reflect the correct state.

Replication addresses this issue by ensuring that all replicas of a node are identical to one another and match the original node from which they were replicated.
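The difference between a plain redundant copy and a replica can be shown in a few lines. The class `ReplicatedStore` is a hypothetical sketch that assumes the simplest possible scheme, where every write is applied to all replicas synchronously. Real systems use more elaborate replication protocols.

```python
import copy

# A redundant copy: nothing keeps it in sync with the original.
original = {"plan": "free"}
redundant_copy = copy.deepcopy(original)
original["plan"] = "premium"  # the copy is not told about this change


class ReplicatedStore:
    """Applies every write to the primary and to all replicas."""

    def __init__(self, replica_count=2):
        self.primary = {}
        self.replicas = [{} for _ in range(replica_count)]

    def write(self, key, value):
        self.primary[key] = value
        for replica in self.replicas:
            replica[key] = value  # propagate the change to each replica

    def read(self, key, replica_index=0):
        """Read from a replica; callers get the same answer as from the primary."""
        return self.replicas[replica_index][key]


store = ReplicatedStore(replica_count=2)
store.write("plan", "free")
store.write("plan", "premium")
replica_values = [store.read("plan", i) for i in range(2)]
```

The redundant copy still reports the old value, while every replica in the store reflects the latest write.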

Replication is also closely tied to transparency. With each added replica in a system, the rest of the system should continue functioning correctly, unaware of any created replicas.

If a replica is updated, the rest of the system need not be aware of it, and if a node is replicated, the rest of the system need not concern itself. For example, if a web server or a database node is replicated, end users of the distributed system shouldn't be aware of whether they are interacting with a replica or the original node.

When transparency is maintained in a system, replication provides numerous benefits:

Reliability: Replication makes the system more reliable. If one node fails and replicas are in place, a replica can step in to perform the role of the failing node, enhancing fault tolerance.

Availability: Having replicas makes the system more available. With backup replicas, the system experiences minimal downtime as these replicas can step in to replace a failing node.

Performance: Replication enhances system performance by enabling more work, serving more requests, and processing more data. This results in a faster system, reduced latency, and the ability to handle a high throughput of data.

Scalability: Replication makes the system easier to scale. With more replicated nodes, adding databases, servers, or services as needed becomes simpler. Replicating nodes in different continents or countries also facilitates geographic scaling.

Many distributed systems leverage this concept to address reliability, performance, and fault-tolerance challenges.

5. Graceful Degradation

Graceful degradation is the ability of a system to maintain basic functionality even when some components or services are not fully operational. This might involve prioritizing essential services during high-load or failure scenarios.

For example, during a traffic spike, a system might prioritize serving core features while temporarily disabling non-essential functionalities.

Best Practices To Implement Graceful Degradation

When designing systems for resiliency and graceful degradation, consider the following four established practices listed in order of their impact on users.

Shedding Workload

When demand exceeds a distributed system's capacity, load shedding can be employed. In this scenario, some requests, such as API calls, database connections, or persistent storage requests, are dropped to prevent potential damage.

It's crucial to carefully decide which requests to drop, considering factors like service levels and request priorities. For instance, health checks may take precedence over other service requests.
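A priority-based shedding decision might look like the sketch below. The function `shed_load`, the request names, and the numeric priorities are all hypothetical. The example assumes that a lower number means a higher priority and that capacity is a simple count of requests.

```python
def shed_load(requests, capacity):
    """Keep the `capacity` highest-priority requests and drop the rest."""
    # sorted() is stable, so requests with equal priority keep arrival order.
    ranked = sorted(requests, key=lambda request: request["priority"])
    return ranked[:capacity], ranked[capacity:]


incoming = [
    {"name": "report-export", "priority": 3},
    {"name": "health-check", "priority": 0},
    {"name": "checkout", "priority": 1},
    {"name": "recommendations", "priority": 2},
]
kept, dropped = shed_load(incoming, capacity=2)
kept_names = [request["name"] for request in kept]
dropped_names = [request["name"] for request in dropped]
```

With room for only two requests, the health check and the checkout are served, and the two lower-priority requests are dropped.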

Time-Shifting Workloads

When the system faces situations where shedding workloads is not possible, developers can use time-shifting workloads. This method allows for asynchronous processing by decoupling the generation of a request from its processing.

Developers can use message queueing tools like Apache Kafka or Google Cloud Pub/Sub to buffer data in asynchronous processing. However, developers must consider the scope of transactions, especially when spanning multiple services.
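The decoupling itself can be illustrated with an in-process queue from the Python standard library. This is only a stand-in for a real message broker, and the class `DeferredProcessor` is a hypothetical name. The request is acknowledged immediately and processed later in batches, when capacity allows.

```python
import queue


class DeferredProcessor:
    """Accept work now, process it later."""

    def __init__(self):
        self.pending = queue.Queue()

    def submit(self, payload):
        """Buffer the request and acknowledge it without doing the work."""
        self.pending.put(payload)
        return "accepted"

    def process_batch(self, handler, max_items):
        """Process up to `max_items` buffered requests."""
        results = []
        for _ in range(max_items):
            try:
                payload = self.pending.get_nowait()
            except queue.Empty:
                break  # nothing left to process
            results.append(handler(payload))
        return results


processor = DeferredProcessor()
acks = [processor.submit(name) for name in ["order-1", "order-2", "order-3"]]
first_batch = processor.process_batch(str.upper, max_items=2)
still_waiting = processor.pending.qsize()
```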

Reducing the Quality of Service

When shedding or time-shifting workloads is not desirable but reducing the load on the system is necessary, developers may opt to temporarily lower the quality of service. For instance, one can limit available features or switch to approximate database queries instead of deterministic ones. This approach ensures that all requests are serviced, avoiding the need for time delays associated with time-shifting.

Adding More Capacity

The ideal approach, especially from a customer experience perspective, is to avoid shedding, time-shifting, or degrading services. Instead, adding capacity is often the best solution for handling workload spikes.

Cloud providers offer features like autoscaling Virtual Machines (VMs) and infrastructure components, while Kubernetes can automatically scale pods based on workload changes. Manual intervention is rarely required unless the pool of available resources is exhausted, such as during a zone failure in a public cloud.

Advanced capacity planning and proactive monitoring can significantly contribute to the creation of more resilient services.

6. Chaos Engineering

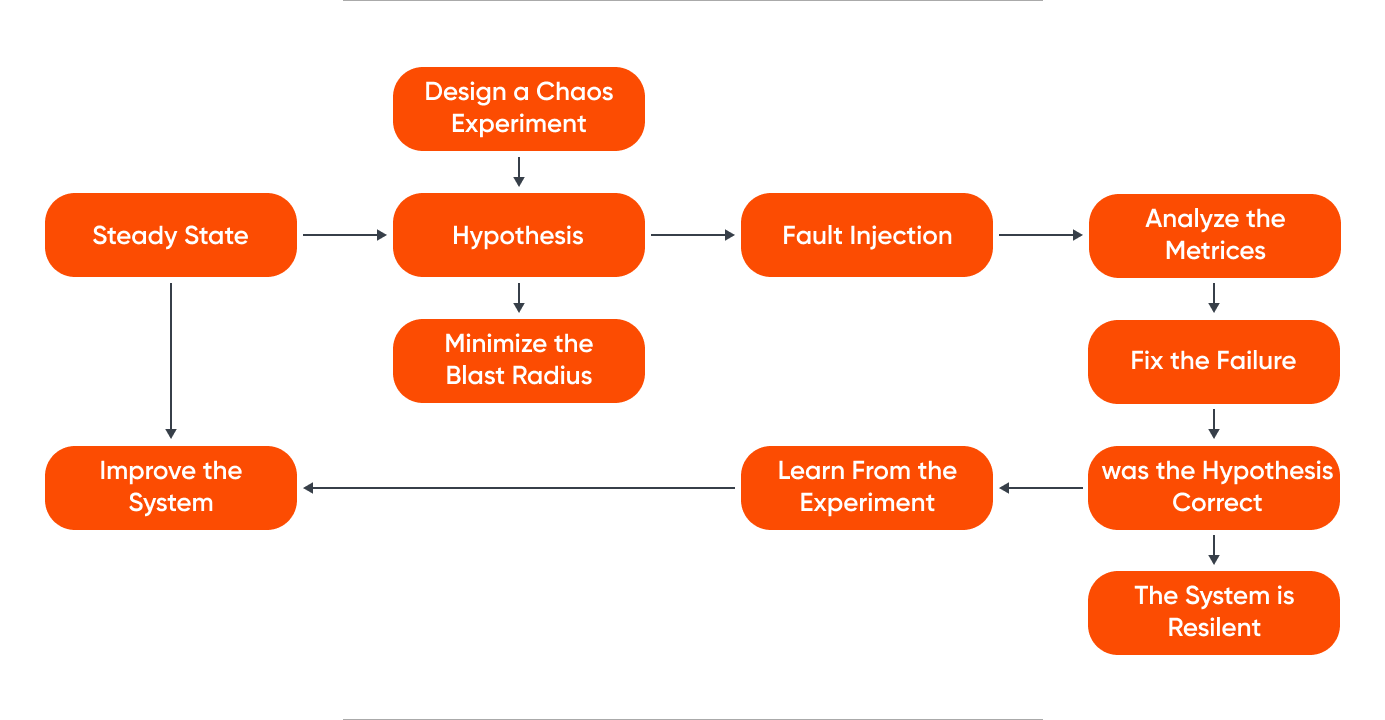

Chaos Engineering is the practice of intentionally injecting failures or disruptions into a system to observe how it responds and to identify weaknesses before they cause significant problems. It involves controlled experiments, such as introducing network latency, simulating server failures, or manipulating dependencies.

The goal is to proactively uncover vulnerabilities and enhance system resilience. This approach involves systematically testing distributed systems to verify their resilience in the face of turbulent conditions and unforeseen disruptions. It is especially relevant for large-scale, distributed systems.

Principles Of Chaos Engineering

Chaos engineering is essentially experimentation to understand how a distributed system responds to failures. By formulating hypotheses and attempting to validate them, chaos engineering allows for continuous learning and the discovery of new insights about the system.

Chaos engineering delves into scenarios that typically fall outside the scope of regular testing, exploring factors that extend beyond the conventional issues tested for. The following principles serve as a foundation for conducting chaos engineering experiments.

Plan The Experiment

Meticulously plan and identify potential areas of failure. Understanding the system's normal behavior is crucial in determining what constitutes a baseline state. Next, formulate a hypothesis about how system components will behave under adverse conditions. Create control and experimental groups, and define metrics, such as error rates or latency.

Design Real-World Events

Outline and introduce real-world events that could disrupt the system. These events mirror actual scenarios, such as hardware failures, server issues, or sudden traffic spikes. The goal is to simulate disruptions that could impact the system's steady state.

Run The Experiment

After establishing the normal behavior and potential disruptive events, experiments are conducted on the system, preferably in a production environment. The goal is to measure the impact of failures and gain insights into the system's real-world behavior.

The ability to prove or disprove the hypothesis enhances confidence in the system's resilience. To mitigate risks, it's crucial to minimize the blast radius during production experiments. If an experiment succeeds, the radius can be increased gradually, with a rollback plan in place in case of unforeseen issues.

Monitor Results

The experiment's results offer a clear understanding of what is functioning well and what requires improvement. By comparing the control and experimental groups, teams can identify differences and make necessary adjustments.

This monitoring process helps identify factors contributing to outages or disruptions in service, enabling teams to make informed enhancements.
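A toy version of such an experiment is sketched below. It wraps a stand-in operation with injected failures and compares the error rate of the control group with that of the experimental group. All names here are hypothetical, and a real experiment would target actual services using dedicated tooling. A seeded random generator is used so that runs are repeatable.

```python
import random


def inject_failures(operation, failure_rate, rng):
    """Wrap operation so that a fraction of calls raise, simulating a faulty dependency."""
    def wrapped(*args, **kwargs):
        if rng.random() < failure_rate:
            raise ConnectionError("injected failure")
        return operation(*args, **kwargs)
    return wrapped


def error_rate(operation, calls):
    """Metric for the experiment: fraction of calls that end in an error."""
    errors = 0
    for _ in range(calls):
        try:
            operation()
        except ConnectionError:
            errors += 1
    return errors / calls


def fetch_profile():
    """Stand-in for a call to a real dependency."""
    return {"name": "example"}


# Control group: the unmodified operation.
control = error_rate(fetch_profile, calls=1000)

# Experimental group: roughly 20% of calls fail.
chaotic_fetch = inject_failures(fetch_profile, failure_rate=0.2, rng=random.Random(42))
experiment = error_rate(chaotic_fetch, calls=1000)
```

Comparing `control` with `experiment` gives the measured impact of the injected fault, which can then be checked against the hypothesis.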

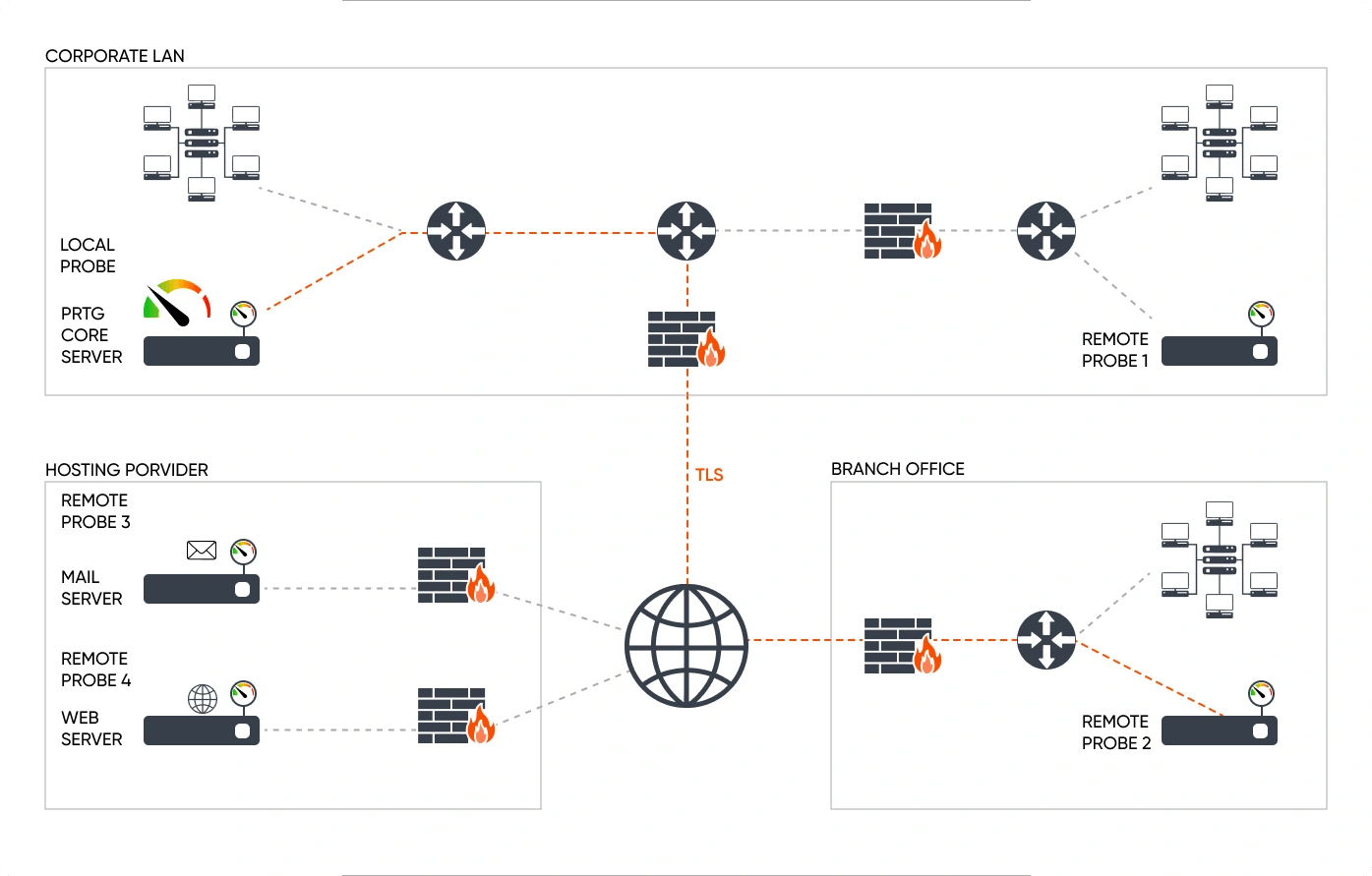

7. Health Checks And Monitoring

Health checks are periodic assessments of the state and performance of system components. They probe services to verify their responsiveness, check resource utilization, and assess overall system health.

Monitoring involves continuous observation of various metrics and logs to detect anomalies, performance issues, or failures. Monitoring tools collect and analyze data on system behavior, resource usage, and error rates. Alerts can be set up to notify administrators or automated systems when predefined thresholds are exceeded, allowing for a rapid response.
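Threshold-based alerting can be reduced to a small comparison step, sketched below. The function `evaluate_health`, the metric names, and the threshold values are hypothetical and would be replaced by whatever a given monitoring setup actually collects.

```python
def evaluate_health(metrics, thresholds):
    """Return an alert message for every metric that exceeds its threshold."""
    alerts = []
    for name, limit in thresholds.items():
        value = metrics.get(name)
        if value is not None and value > limit:
            alerts.append(f"{name}={value} exceeds {limit}")
    return alerts


thresholds = {"cpu_percent": 85, "error_rate": 0.05, "p99_latency_ms": 500}
metrics = {"cpu_percent": 93, "error_rate": 0.01, "p99_latency_ms": 850}
alerts = evaluate_health(metrics, thresholds)
```

In this sample, the CPU and latency readings each produce an alert, while the error rate stays under its limit. The resulting list could be sent to administrators or to an automated system.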

Types of Monitoring In Distributed Systems

There are different types of distributed systems monitoring.

Application-Level Monitoring: This type of monitoring centers on the performance of the system's software, enabling the detection of issues such as slow response times or errors.

Infrastructure-Level Monitoring: It concentrates on both hardware and software, aiding in the identification of problems like overloaded servers or network latency.

Both are indispensable for the effective management of distributed systems.

Various aspects of a distributed system can be monitored, including hardware, software, and networks. Hardware monitoring ensures the proper functioning of physical components like servers and storage devices. Software monitoring ensures the correct operation of applications, including databases and web servers.

How To Implement Distributed Systems Monitoring

Follow the steps given below to implement distributed system monitoring.

Step 1: Define KPIs

Identify metrics to track

Determine the frequency of data collection

Step 2: Set Up Monitoring Infrastructure

Choose appropriate tools

Deploy the monitoring infrastructure

Step 3: Collect And Analyze Data

Utilize the infrastructure to gather and analyze data.

Apply insights to troubleshoot issues or enhance performance.

Best Practices To Leverage Logging For Distributed Systems Management

Logging serves as a valuable data collection method for distributed systems monitoring, capturing information about system events, user logins, or errors. There are two main types of logs:

System Logs: These are generated by the operating system to help detect system errors.

Application Logs: These are generated by software applications to identify application errors and slow response times.

Logging provides crucial capabilities for efficiently managing distributed systems by:

Recording system events for troubleshooting.

Identifying, diagnosing, and addressing errors.

Offering insights into system performance for optimization.

To streamline logging processes, consider the following best practices:

Utilize a Log Management Tool: Tools like Retrace, Rapid7 Insight IDR (Formerly Logentries), GoAccess, and Logz.io can efficiently index and search log data.

Set Up Alerts: Receive notifications for specific events or threshold breaches.

Implement Log Aggregation: Collect log data from multiple servers for extensive distributed systems.
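At its core, log aggregation merges entries from several servers into one timeline. The sketch below shows that step with the standard library, assuming each server's log is already sorted by timestamp. The function `aggregate_logs` and the sample entries are hypothetical. A log management tool would handle collection, indexing, and search on top of this.

```python
import heapq


def aggregate_logs(*server_logs):
    """Merge per-server logs, each sorted by timestamp, into a single timeline."""
    return list(heapq.merge(*server_logs, key=lambda entry: entry[0]))


# Entries are (timestamp, server, message) tuples.
web_logs = [(1, "web", "request received"), (4, "web", "response sent")]
db_logs = [(2, "db", "query started"), (3, "db", "query finished")]

timeline = aggregate_logs(web_logs, db_logs)
timestamps = [entry[0] for entry in timeline]
```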

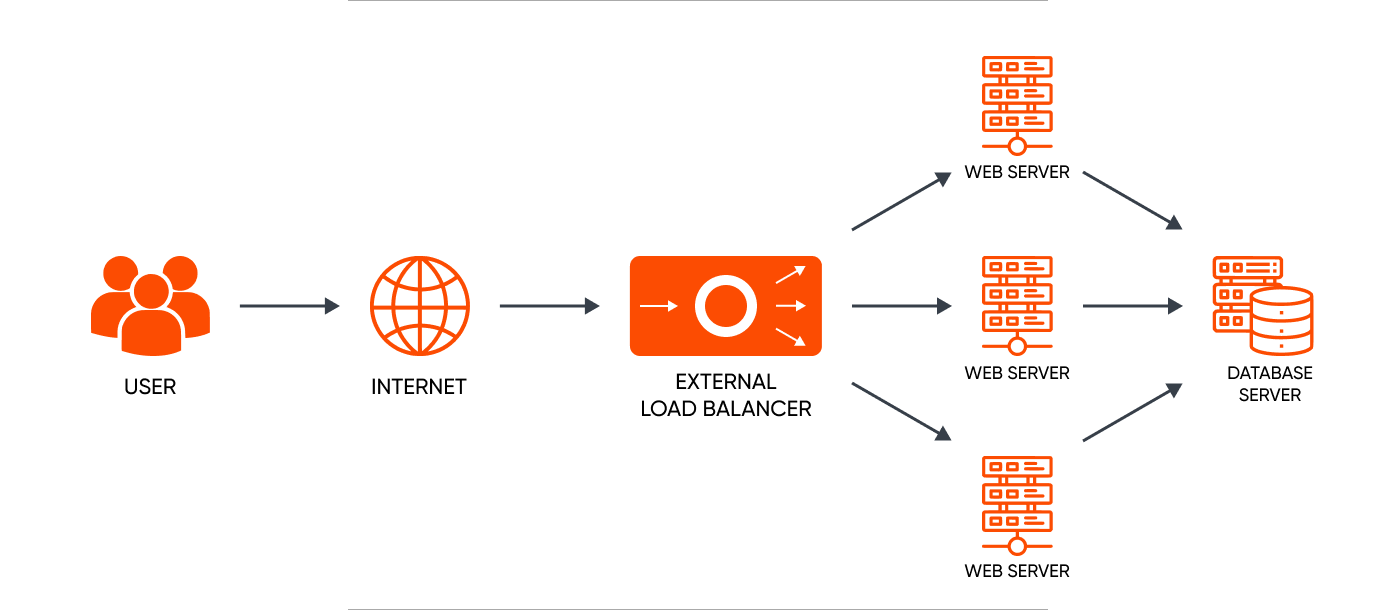

8. Load Balancing

Load balancing distributes incoming network traffic or workload across multiple servers to ensure no single resource is overwhelmed. This helps optimize resource utilization, throughput, and fault tolerance while minimizing response time. Through load balancing, organizations can scale horizontally and add more nodes to the system.

How Load Balancers Operate

Load balancers can take various forms, including:

Physical devices

Virtualized instances running on specialized hardware

Software processes

Application delivery controllers

Load balancers employ diverse algorithms for distributing the load on server farms, such as round-robin, server response time, and the least connection method. These devices detect the health of backend resources and direct traffic only to servers capable of satisfying requests.
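A round-robin balancer that skips unhealthy servers can be sketched in a few lines. The class `RoundRobinBalancer` and the server names are hypothetical. In a real load balancer, health status would be updated by periodic health checks, not set by hand.

```python
import itertools


class RoundRobinBalancer:
    """Hand out servers in rotation, skipping any marked unhealthy."""

    def __init__(self, servers):
        self.servers = list(servers)
        self.healthy = set(self.servers)
        self._rotation = itertools.cycle(self.servers)

    def mark_unhealthy(self, server):
        self.healthy.discard(server)

    def mark_healthy(self, server):
        if server in self.servers:
            self.healthy.add(server)

    def next_server(self):
        # Look at each server at most once per call.
        for _ in range(len(self.servers)):
            server = next(self._rotation)
            if server in self.healthy:
                return server
        raise RuntimeError("no healthy servers available")


balancer = RoundRobinBalancer(["a", "b", "c"])
balancer.mark_unhealthy("b")
picks = [balancer.next_server() for _ in range(4)]
```

With server `b` marked unhealthy, traffic alternates between `a` and `c` until `b` is marked healthy again.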

Whether hardware or software and irrespective of the algorithm used, load balancers ensure an equitable distribution of traffic across different web servers. This prevents any single server from becoming overloaded, ensuring reliability.

Efficient load-balancing algorithms are especially crucial for cloud-based eCommerce websites. Often likened to a traffic cop, load balancers route requests to the appropriate location, averting bottlenecks and unforeseen incidents.

Load balancing is a scalable approach for supporting the numerous web-based services in today's multi-device, multi-app workflows. Coupled with platforms enabling seamless access to various applications and desktops in modern digital workspaces, load balancing ensures a consistent and reliable end-user experience.

Conclusion

The increasing cost of downtime highlights the critical need to build applications on resilient distributed systems. By using strategies like circuit breakers and redundancy, businesses strengthen their applications and handle potential issues in advance.

Graceful degradation ensures continued functionality in tough situations, while health checks and monitoring identify and address problems on time. In the dynamic world of distributed systems, prioritizing reliability through these strategies is essential.

These strategies not only protect against unexpected problems but also make the computing environment more adaptable, scalable, and user-friendly.

Schedule a call with our experts to learn more about how we can help you leverage these strategies to build resilient distributed systems.

Bassam Ismail, Director of Digital Engineering

Away from work, he likes cooking with his wife, reading comic strips, or playing around with programming languages for fun.

Hanush Kumar, Marketing Associate

Hanush finds joy in YouTube content on automobiles and smartphones, prefers watching thrillers, and enjoys movie directors' interviews where they give out book recommendations. His essential life values? Positivity, continuous learning, self-respect, and integrity.
